Using hand gestures to communicate between humans and robots makes interaction easier and removes the need for physical contact. At the core of our gesture recognition is a Long Short-Term Memory (LSTM) artificial neural network (NN) optimized for deployment on a microcontroller. The NN recognizes gestures such as swiping up, down, left, or right solely by analyzing the movements of the hand wearing the glove. To record these movements, two Inertial Measurement Units (IMUs) are placed on the thumb and the index finger of the glove.

Artificial Intelligence on the Glove

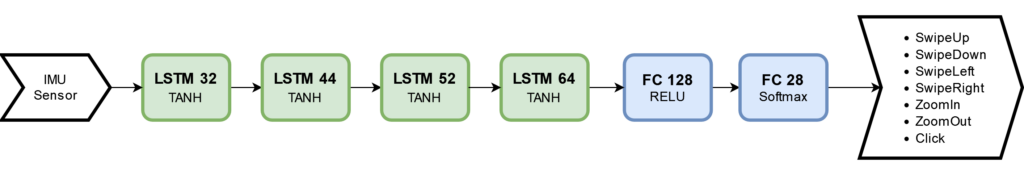

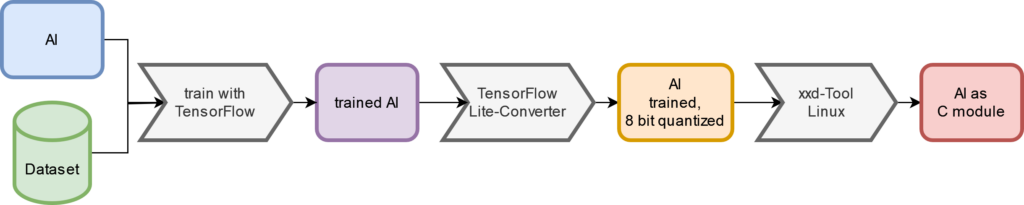

As mentioned above, the glove uses an LSTM model to classify gestures from hand movements. The architecture of the model can be seen in the Figure below. For inference on the microcontroller, the machine learning (ML) framework TensorFlow Lite-Micro is used. It is a lightweight ML framework for edge devices that works together with the TensorFlow Lite model converter, which provides basic but effective built-in optimizations such as quantization. After conversion, the model is a binary blob (a FlatBuffer) that TensorFlow Lite-Micro interprets on the device.
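To make the quantization step more concrete: TensorFlow Lite's int8 scheme represents a real value r as r ≈ scale · (q - zero_point), with q in [-128, 127]. The minimal sketch below derives scale and zero point from a min/max range (the asymmetric variant used for activations) and round-trips a few values. The helper names are hypothetical and this is not the converter's actual code. It also shows why outliers matter: one large value stretches the range and coarsens the resolution for all other values.

```python
"""Minimal sketch of affine int8 quantization: real ~= scale * (q - zero_point).

Hypothetical helper names; this is not the TensorFlow Lite converter's code.
"""

INT8_MIN, INT8_MAX = -128, 127


def quant_params(rmin: float, rmax: float) -> tuple[float, int]:
    """Derive (scale, zero_point) so that [rmin, rmax] maps onto [-128, 127]."""
    # The range must contain 0.0 so that zero is represented exactly.
    rmin, rmax = min(rmin, 0.0), max(rmax, 0.0)
    scale = (rmax - rmin) / (INT8_MAX - INT8_MIN)
    if scale == 0.0:
        scale = 1.0  # degenerate all-zero range
    zero_point = round(INT8_MIN - rmin / scale)
    zero_point = max(INT8_MIN, min(INT8_MAX, zero_point))
    return scale, zero_point


def quantize(x: float, scale: float, zero_point: int) -> int:
    # Python's round() rounds half to even; good enough for a sketch.
    q = round(x / scale) + zero_point
    return max(INT8_MIN, min(INT8_MAX, q))  # saturate to the int8 range


def dequantize(q: int, scale: float, zero_point: int) -> float:
    return scale * (q - zero_point)


if __name__ == "__main__":
    samples = [-1.0, -0.25, 0.0, 0.3, 1.0]
    for label, data in (("clean", samples), ("with outlier", samples + [40.0])):
        scale, zp = quant_params(min(data), max(data))
        # Worst-case round-trip error measured on the regular samples only.
        err = max(abs(dequantize(quantize(x, scale, zp), scale, zp) - x)
                  for x in samples)
        print(f"{label}: scale={scale:.4f} zero_point={zp} max_error={err:.4f}")
```

With the outlier included, the scale grows from about 0.008 to about 0.16, so the typical values near ±1 are represented with roughly twenty times coarser steps.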

Workflow

The workflow for an ML model is as follows. First, define a model architecture and train it on a given dataset using TensorFlow. Make sure that every operation the model uses can be converted to a TensorFlow Lite model and is also supported by the edge framework; the TensorFlow Lite documentation lists which operations are supported. LSTMs, for example, had no default implementation in the edge framework as of Q2 2022. To solve this issue, we used a third-party implementation and adapted it.

In the second step, the model is converted and optimized using the TensorFlow Lite converter. As an optimization, int8 quantization is applied. Before quantizing, make sure that the model's input data from the dataset lies within a range that int8 can represent well and contains no outliers, because outliers stretch the quantization range and leave fewer int8 levels for typical values.

After conversion, the model can be saved as a C file and included in the firmware project. To run inference on the edge device, the firmware loads the model and allocates memory for the input and output tensors as well as for the intermediate layer tensors.
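Saving the converted model as a C file is commonly done with `xxd -i model.tflite`. The sketch below does the equivalent in Python so that the step can live in the training script. In a real pipeline, `model_bytes` would be the return value of `tf.lite.TFLiteConverter.convert()`; here placeholder bytes stand in so the sketch runs without TensorFlow. The array names `g_model`/`g_model_len` and the 16-byte alignment are assumptions for illustration, not taken from the original project.

```python
"""Sketch: emit a TensorFlow Lite flatbuffer as a C source file (like `xxd -i`).

Assumptions: the names g_model / g_model_len and the 16-byte alignment
are illustrative choices, not part of any official tool.
"""


def to_c_array(model_bytes: bytes, name: str = "g_model", per_line: int = 12) -> str:
    """Return C11 source defining the model as a const byte array plus its length."""
    lines = [
        "/* Auto-generated from a TensorFlow Lite model; do not edit by hand. */",
        "#include <stdalign.h>",
        "#include <stddef.h>",
        "",
        f"alignas(16) const unsigned char {name}[] = {{",
    ]
    for i in range(0, len(model_bytes), per_line):
        chunk = model_bytes[i:i + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    lines.append("};")
    lines.append(f"const size_t {name}_len = {len(model_bytes)};")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder bytes; in practice: model_bytes = converter.convert()
    model_bytes = bytes(range(20))
    source = to_c_array(model_bytes)
    print(source)
    # To write the file: open("model_data.c", "w").write(source)
```

The firmware then declares `extern const unsigned char g_model[];` and passes the array to the TensorFlow Lite-Micro interpreter.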

Specific Optimizations

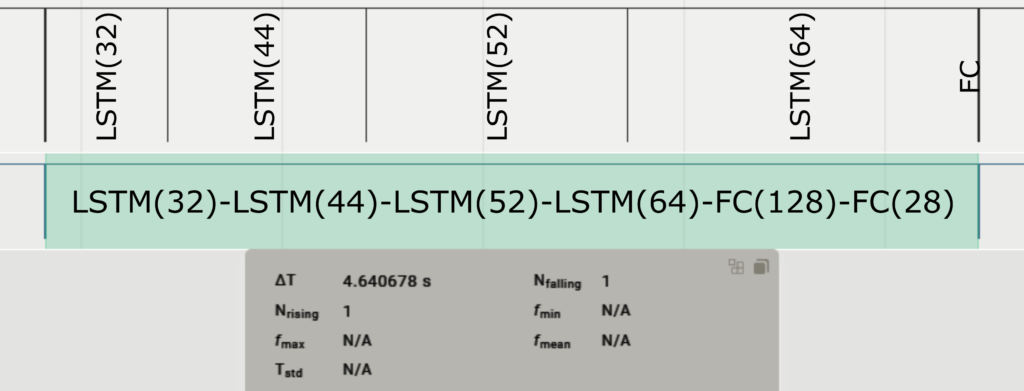

After the steps described above, the model latency on the microcontroller (from model input to output) was nearly 5 seconds. The following figure shows the timing of the model computation.

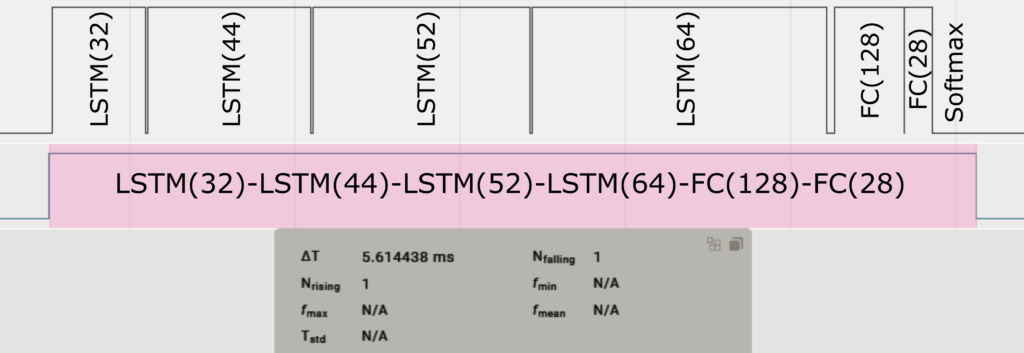

Since the sensor data needs to be processed at 50 Hz, optimizations are necessary. The first step was to use the ARM-specific instruction set to speed up all vector operations, which reduced the latency by more than a factor of 4. The second step was to exploit the state that an LSTM cell maintains by design: only the new data point received from the sensors needs to be processed instead of the whole data window. This optimization brought the model latency down to less than 6 milliseconds, well within the 20 ms available per sample at 50 Hz. For details, see our papers (Online Handwriting Recognition, Online Gesture Recognition). The figure below shows the model computation timing after optimization.
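The stateful optimization can be illustrated with a toy LSTM cell in pure Python. The weights are random and the sizes (3 inputs, 4 hidden units) are hypothetical; the authors' actual implementation is described in their papers. Re-running the cell over the stored history on every 50 Hz tick (a 20 ms budget) repeats work that grows with the history length. Keeping the hidden and cell state `(h, c)` and feeding only the newest sample yields the same output with a single cell update per sample.

```python
"""Toy single-layer LSTM: full recomputation vs. stateful streaming.

Pure Python, random weights, hypothetical sizes (3 inputs, 4 hidden units).
"""
import math
import random

N_IN, N_HID = 3, 4
rng = random.Random(0)


def _mat(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


# One weight matrix per gate (input i, forget f, candidate g, output o),
# each applied to the concatenation [x_t, h_{t-1}], plus a bias vector.
W = {gate: _mat(N_HID, N_IN + N_HID) for gate in "ifgo"}
B = {gate: [0.0] * N_HID for gate in "ifgo"}


def _sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def lstm_step(x, h, c):
    """One LSTM cell update: return the new (h, c) for input sample x."""
    z = list(x) + list(h)
    pre = {gate: [sum(w * v for w, v in zip(row, z)) + b
                  for row, b in zip(W[gate], B[gate])]
           for gate in "ifgo"}
    i = [_sigmoid(v) for v in pre["i"]]
    f = [_sigmoid(v) for v in pre["f"]]
    g = [math.tanh(v) for v in pre["g"]]
    o = [_sigmoid(v) for v in pre["o"]]
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new


def run_from_scratch(samples):
    """Naive: re-run the cell over every stored sample; cost grows with length."""
    h, c = [0.0] * N_HID, [0.0] * N_HID
    for x in samples:
        h, c = lstm_step(x, h, c)
    return h


class StreamingLSTM:
    """Stateful: keep (h, c) between calls and process only the newest sample."""

    def __init__(self):
        self.h, self.c = [0.0] * N_HID, [0.0] * N_HID

    def push(self, x):
        self.h, self.c = lstm_step(x, self.h, self.c)
        return self.h


if __name__ == "__main__":
    stream = [[rng.uniform(-1, 1) for _ in range(N_IN)] for _ in range(50)]
    model = StreamingLSTM()
    for t in range(len(stream)):
        h_stream = model.push(stream[t])           # 1 cell update
        h_full = run_from_scratch(stream[:t + 1])  # t + 1 cell updates
        assert h_stream == h_full
    print("streaming output matches full recomputation for", len(stream), "steps")
```

The comparison here is against re-processing the full history. With a sliding window that discards old samples, the two results are not identical, because the carried state still reflects samples older than the window.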

Dataset

An LSTM classifier is a supervised ML model, so training requires a labeled dataset. Unfortunately, we could not find an existing dataset recorded with a similar setup, so we recorded and labeled our own. The dataset contains data for seven different gestures: swipe up, swipe down, swipe left, swipe right, zoom in, zoom out, and click. For more details on the dataset, see our paper (Online Gesture Recognition).
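The following sketch illustrates one way to turn a labeled recording into supervised training examples. It maps the seven gesture names to class indices and cuts the recording into fixed-length, overlapping windows. The window length, step size, and the assumption of 12 features per sample (two IMUs, each with a 3-axis accelerometer and a 3-axis gyroscope) are all hypothetical; the actual recording format is described in the paper.

```python
"""Sketch: class-index map for the seven gestures and fixed-length windowing.

Hypothetical format: each sample is a list of 12 floats (two IMUs x
3-axis accelerometer + 3-axis gyroscope); window/step sizes are illustrative.
"""

GESTURES = ["swipe_up", "swipe_down", "swipe_left", "swipe_right",
            "zoom_in", "zoom_out", "click"]
LABEL_TO_INDEX = {name: i for i, name in enumerate(GESTURES)}
N_FEATURES = 12


def make_windows(samples, gesture, window=50, step=25):
    """Cut one labeled recording into overlapping (window, label_index) pairs."""
    if any(len(s) != N_FEATURES for s in samples):
        raise ValueError(f"every sample must have {N_FEATURES} features")
    label = LABEL_TO_INDEX[gesture]  # raises KeyError for unknown gesture names
    return [(samples[start:start + window], label)
            for start in range(0, len(samples) - window + 1, step)]


if __name__ == "__main__":
    # 100 dummy samples (2 s at 50 Hz) of a recorded "click" gesture.
    recording = [[0.0] * N_FEATURES for _ in range(100)]
    examples = make_windows(recording, "click")
    print(len(examples), "windows, label", examples[0][1])
```

Recordings shorter than one window produce no examples. In this sketch they are silently skipped rather than padded.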